It's been 50 years since Captain Kirk first spoke commands to an unseen, all-knowing Computer on Star Trek and not quite as long since David Bowman was serenaded by HAL 9000's rendition of "A Bicycle Built for Two" in 2001: A Space Odyssey. While we've been talking to our computers and other devices for years (often in the form of expletive interjections), we're only now beginning to scratch the surface of what's possible when voice commands are connected to artificial intelligence software.

Meanwhile, we've always seemingly fantasized about talking toys, from Woody and Buzz in Toy Story to that creepy AI teddy bear that tagged along with Haley Joel Osment in Steven Spielberg's A.I. (Well, maybe people aren't dreaming of that teddy bear.) And ever since the Furby craze, toymakers have been trying to make toys smarter. They've even connected them to the cloud—with predictably mixed results.

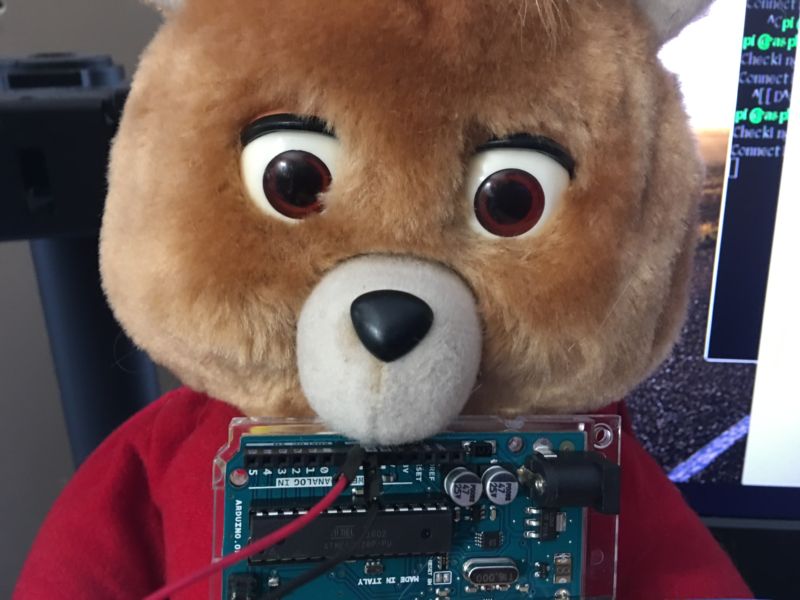

Naturally, I decided it was time to push things forward. I had an idea to connect a speech-driven AI and the Internet of Things to an animatronic bear—all the better to stare into the lifeless, occasionally blinking eyes of the Singularity itself with. Ladies and gentlemen, I give you Tedlexa: a gutted 1998 model of the Teddy Ruxpin animatronic bear tethered to Amazon's Alexa Voice Service.

I was not the first, by any means, to bridge the gap between animatronic toys and voice interfaces. Brian Kane, an instructor at the Rhode Island School of Design, threw down the gauntlet with a video of Alexa connected to that other servo-animated icon, Big Mouth Billy Bass. This Frankenfish was all powered by an Arduino.

I could not let Kane's hack go unanswered, having previously explored the uncanny valley with Bearduino—a hardware hacking project of Portland-based developer/artist Sean Hathaway. With a hardware-hacked bear and Arduino already in hand (plus a Raspberry Pi II and assorted other toys at my disposal), I set off to create the ultimate talking teddy bear.

To our future robo-overlords: please, forgive me.

His master's voice

Amazon is one of a pack of companies vying to connect voice commands to the vast computing power of "the cloud" and the ever-growing Internet of (Consumer) Things. Microsoft, Apple, Google, and many other contenders have sought to connect voice interfaces in their devices to an exponentially expanding number of cloud services, which in turn can be tethered to home automation systems and other "cyberphysical" systems.

While Microsoft's Project Oxford services have remained largely experimental and Apple's Siri remains bound to Apple hardware, Amazon and Google have rushed headlong into a battle to become the voice service incumbent. As ads for Amazon's Echo and Google Home have saturated broadcast and cable television, the two companies have simultaneously started to open the associated software services up to others.

I chose Alexa as a starting point for our descent into IoT hell for a number of reasons. One of them is that Amazon lets other developers build “skills” for Alexa that users can choose from a marketplace, like mobile apps. These skills determine how Alexa interprets certain voice commands, and they can be built on Amazon’s Lambda application platform or hosted by the developers themselves on their own server. (Rest assured, I’m going to be doing some future work with skills.) Another point of interest is that Amazon has been fairly aggressive about getting developers to build Alexa into their own gadgets—including hardware hackers. Amazon has also released its own demonstration version of an Alexa client for a number of platforms, including the Raspberry Pi.

AVS, or Alexa Voice Service, requires a fairly small computing footprint on the user's end. All of the voice recognition and synthesis of voice responses happens in Amazon's cloud; the client simply listens for commands, records them, and forwards them as an HTTP POST request carrying a JavaScript Object Notation (JSON) object to AVS's Web-based interfaces. The voice responses are sent as audio files to be played by the client, wrapped in a returned JSON object. Sometimes, they include a hand-off for streamed audio to a local audio player, as with AVS's "Flash Briefing" feature (and music streaming, though that's only available on commercial AVS products right now).
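To make the request shape concrete, here is a minimal sketch of one common way to put JSON metadata and recorded audio into a single POST: a multipart body. The part names, content types, metadata layout, and token are all assumptions made for illustration, not the documented AVS wire format, and nothing is actually sent over the network.

```python
import json
import uuid


def build_voice_request(audio_bytes, access_token, metadata):
    """Return (headers, body) for a multipart POST carrying JSON plus audio.

    Everything here is illustrative: the part names, content types, and
    metadata layout are assumptions, not the documented AVS wire format.
    """
    boundary = uuid.uuid4().hex
    json_part = json.dumps(metadata).encode("utf-8")

    def part(name, content_type, payload):
        # One multipart section: headers, blank line, payload, CRLF.
        header = (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n'
            f"Content-Type: {content_type}\r\n\r\n"
        ).encode("utf-8")
        return header + payload + b"\r\n"

    body = (
        part("request", "application/json; charset=UTF-8", json_part)
        + part("audio", "audio/L16; rate=16000; channels=1", audio_bytes)
        + f"--{boundary}--\r\n".encode("utf-8")
    )
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": f"multipart/form-data; boundary={boundary}",
        "Content-Length": str(len(body)),
    }
    return headers, body


headers, body = build_voice_request(
    audio_bytes=b"\x00\x01" * 8,        # stand-in for recorded PCM samples
    access_token="HYPOTHETICAL_TOKEN",  # placeholder, not a real credential
    metadata={"format": "audio/L16; rate=16000; channels=1"},
)
```

The point of the sketch is only that the client's job is packaging: a small JSON description and a blob of audio go up, and the heavy lifting happens on the far end.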

Before I could build anything with Alexa on a Raspberry Pi, I needed to create a project profile on Amazon’s developer site. When you create an AVS project on the site, it creates a set of credentials and shared encryption keys used to configure whatever software you use to access the service.

- The Amazon Developer Console, where you create the configuration for a prototype Alexa device. First, it needs a name.

- The next step in creating a configuration: the generation of a security profile. These are used to authenticate the device via OAuth with Amazon's Alexa back-end.

- These source addresses for the device are required to allow local setup details to be passed via OAuth to Alexa. The first set of URLs under each setting here are for the AWS sample app's configuration; the second set (including the third address) are for the AlexaPi code I used on this project. Note they're not HTTPS, something to fix later.

- Amazon wants some more details on your "product" to finish the configuration profile.

Once you've got the AVS client running, it needs to be configured with a Login With Amazon (LWA) token through its own setup Web page—giving it access to Amazon's services (and potentially, to Amazon payment processing). So, in essence, I would be creating a Teddy Ruxpin with access to my credit card. This will be a topic for some future security research on IoT on my part.

Amazon offers developers a sample Alexa client to get started, including one implementation that will run on Raspbian, the Raspberry Pi implementation of Debian Linux. However, the official demo client is written largely in Java. Despite, or perhaps because of, my past Java experience, I was leery of trying to do any interconnection between the sample code and the Arduino-driven bear. As far as I could determine, I had two possible courses of action:

- A hardware-focused approach that used the audio stream from Alexa to drive the animation of the bear.

- Finding a more accessible client or writing my own, preferably in an accessible language like Python, that could drive the Arduino with serial commands.

Naturally, being a software-focused guy and having already done a significant amount of software work with Arduino, I chose…the hardware route. Hoping to overcome my lack of experience with electronics with a combination of Internet searches and raw enthusiasm, I grabbed my soldering iron.

Plan A: Audio in, servo out

My plan was to use a splitter cable for the Raspberry Pi’s audio and to run the audio both to a speaker and to the Arduino. The audio signal would be read as analog input by the Arduino, and I would somehow convert the changes in volume in the signal into values that would in turn be converted to digital output to the servo in the bear’s head. The elegance of this solution was that I would be able to use the animated robo-bear with any audio source—leading to hours of entertainment value.
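As a rough software model of that idea, the sketch below turns windows of audio samples into jaw angles by measuring loudness. The rest angle of 90, the open angle of 130, the full-scale level of 0.7, and the 160-sample window are all hypothetical values picked for illustration; on the real hardware this logic would have to live on the Arduino.

```python
import math


def rms(samples):
    """Root-mean-square level of a window of samples in the range -1.0..1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_to_angle(level, closed=90, open_=130, full_scale=0.7):
    """Map a loudness level onto a jaw angle, clamped to the servo's range."""
    fraction = min(max(level / full_scale, 0.0), 1.0)
    return round(closed + fraction * (open_ - closed))


def jaw_angles(samples, window=160):
    """Yield one servo angle per window of audio samples."""
    for start in range(0, len(samples), window):
        yield level_to_angle(rms(samples[start:start + window]))


# One loud burst followed by silence, 160 samples each.
tone = [0.7 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
angles = list(jaw_angles(tone + [0.0] * 160))
```

The loud window pushes the jaw partway open and the silent window returns it to the rest angle, which is all the lip-sync this approach can offer.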

It turns out this is the approach Kane took with his Bass-lexa. In a phone conversation, he revealed for the first time how he pulled off his talking fish as an example of rapid prototyping for his students at RISD. "It's all about making it as quickly as possible so people can experience it," he explained. "Otherwise, you end up with a big project that doesn't get into people's hands until it's almost done."

So, Kane's rapid-prototyping solution: connecting an audio sensor physically duct-taped to an Amazon Echo to an Arduino controlling the motors driving the fish.

Of course, I knew none of this when I began my project. I also didn't have an Echo or a $4 audio sensor. Instead, I was stumbling around the Internet looking for ways to hotwire the audio jack of my Raspberry Pi into the Arduino.

I knew that audio signals are alternating current, forming a waveform that drives headphones and speakers. The analog pins on the Arduino can only read positive direct current voltages, however, so in theory the negative-value peaks in the waves would be read with a value of zero.
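A small simulation shows what that clipping looks like. It assumes a 10-bit converter with a 5-volt reference and a 1-volt peak signal; both the reference and the signal level are illustrative guesses rather than measurements from this project.

```python
import math

ADC_MAX = 1023  # 10-bit converter, as on common Arduino boards
V_REF = 5.0     # assumed analog reference voltage


def analog_read(volts):
    """Model an ADC that clamps input to 0..V_REF and returns 0..1023."""
    clamped = min(max(volts, 0.0), V_REF)
    return int(clamped / V_REF * ADC_MAX)


def sample_wave(peak_volts, cycles=1, points_per_cycle=8):
    """Sample a sine wave centred on 0 V, the way raw line audio arrives."""
    readings = []
    for n in range(cycles * points_per_cycle):
        volts = peak_volts * math.sin(2 * math.pi * n / points_per_cycle)
        readings.append(analog_read(volts))
    return readings


readings = sample_wave(peak_volts=1.0)
```

In this model the positive half of the cycle produces small nonzero readings and the entire negative half collapses to zero, so half of the waveform is simply lost.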

I was given false hope by an Instructable I found that moved a servo arm in time with music—simply by soldering a 1,000 ohm resistor to the ground of the audio cable. After looking at the Instructable, I started to doubt its sanity a bit even as I moved boldly forward.

I need sanity check on this Instructs le: wtf with the soldering? https://t.co/Mc3HlqqNtW

— Sean Gallagher (@thepacketrat) November 15, 2016

Me, after a few hours with a soldering iron. pic.twitter.com/16aaWkI4Em

— Sean Gallagher (@thepacketrat) November 15, 2016

While I saw data from the audio cable streaming in via test code running on the Arduino, it was mostly zeros. So after taking some time to review some other projects, I realized that the resistor was damping down the signal so much it was barely registering at all. This turned out to be a good thing—doing a direct patch based on the approach the Instructable presented would have put 5 volts or more into the Arduino’s analog input (more than double its maximum).

Getting the Arduino-only approach to work would mean making an extra run to another electronics supply store. Sadly, I discovered my go-to, Baynesville Electronics, was in the last stages of its Going Out of Business Sale and was running low on stock. But I pushed forward, needing to procure the components to build an amplifier with a DC offset to convert the audio signal into something I could work with.
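The arithmetic behind such a stage is simple enough to sketch. The function names, the 5-volt reference, the 1-volt peak input, and the 90 percent headroom figure below are assumptions for illustration, not values from a real circuit.

```python
def bias_and_gain(peak_volts, v_ref=5.0, headroom=0.9):
    """Pick an offset and gain so a +/-peak signal fits inside 0..v_ref."""
    offset = v_ref / 2.0                       # centre the wave mid-range
    gain = (offset * headroom) / peak_volts    # leave a margin at both rails
    return offset, gain


def condition(volts, offset, gain):
    """Apply the amplifier stage: scale the signal, then lift it by the offset."""
    return offset + gain * volts


offset, gain = bias_and_gain(peak_volts=1.0)
lowest = condition(-1.0, offset, gain)   # most negative input
highest = condition(1.0, offset, gain)   # most positive input
```

With these numbers the whole waveform lands between roughly a quarter of a volt and 4.75 volts, so nothing is clipped at zero and nothing exceeds the reference.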

It was when I started shopping for oscilloscopes that I realized I had ventured into the wrong bear den. Fortunately, there was a software answer waiting in the wings for me—a GitHub project called AlexaPi.

It’s my creation

Plan B quickly developed after discovering AlexaPi, a project combining the efforts of several developers that neatly packaged all of the installs and dependencies required to drive Alexa with Python. I soon realized that I would be able to easily hack AlexaPi and achieve my questionable goals with just a few lines of Python code and an artisanal Arduino sketch.

AlexaPi’s scripted install retrieves all the components required, compiles some of them from source, and then configures the software with the identification credentials and keys Amazon provides. The actual code of AlexaPi itself is in two Python scripts—a “main” and a Python-based player for the TuneIn audio streaming service (used to stream the “Flash” news updates and other external audio content called by Alexa).

Because the voice of Alexa is delivered as an audio file rather than voice-synthesized locally, there’s no way to easily drive the servo commands in synchronization with the words being spoken. So after poking around AlexaPi’s code a bit, I decided the easiest way to handle it was to have the Arduino handle all the animation itself—and just write code in Python telling it when to start and stop.

To throw some variation into the motions of the Teddy Ruxpin’s jaws and make it look less like a furry Pac-Man, I wrote an Arduino sketch with five different sets of servo “sweeps” with different delays and position limits. I put a counter variable in to track the loops and determined which variation to use based on a modulo operation (because you're not really a developer until you use a modulo operation). An event listener checked for commands to start or stop animations sent over the serial connection. Here’s the sketch code (downloadable from GitHub):

```cpp
#include <Servo.h>

Servo myservo;            // jaw servo
int val = 0;              // current servo angle in degrees
int count = 0;            // number of animation loops played
int pose;                 // which sweep variation to play
char serialData;          // last command byte received
boolean animate = false;  // true while the jaw should move

void setup()
{
  myservo.attach(9);   // servo signal on pin 9
  myservo.write(90);   // start at the 90-degree position
  Serial.begin(9600);
  while (!Serial) {;}  // wait for serial port to connect. Needed for Leonardo only
}

// Runs between passes of loop() when serial data is waiting.
void serialEvent()
{
  while (Serial.available()) {
    serialData = (char)Serial.read();
    if (serialData == 'g') {
      animate = true;   // 'g' starts the animation
    }
    if (serialData == 'x') {
      animate = false;  // 'x' stops it
    }
  }
}

void loop()
{
  if (animate == true) {
    count = count + 1;
    pose = count % 5;  // pick the sweep variation for this pass
    if (pose == 4) {
      for (val = 130; val >= 1; val -= 3) { myservo.write(val); delay(18); }
      for (val = 1; val <= 130; val += 10) { myservo.write(val); delay(18); }
    }
    else if (pose == 3) {
      for (val = 90; val >= 3; val -= 5) { myservo.write(val); delay(18); }
      for (val = 3; val <= 90; val += 10) { myservo.write(val); delay(18); }
    }
    else if (pose == 2) {
      for (val = 170; val >= 3; val -= 3) { myservo.write(val); delay(8); }
      for (val = 3; val <= 90; val += 9) { myservo.write(val); delay(18); }
    }
    else if (pose == 1) {
      for (val = 129; val >= 3; val -= 5) { myservo.write(val); delay(18); }
      for (val = 5; val <= 110; val += 10) { myservo.write(val); delay(18); }
    }
    else if (pose == 0) {
      for (val = 80; val >= 1; val -= 4) { myservo.write(val); delay(18); }
      for (val = 5; val <= 90; val += 10) { myservo.write(val); delay(18); }
    }
  }
}
```
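For anyone who wants to reason about the sweeps without a bear on the bench, here is a small Python model of the loops in the listing. It is only a desk-check aid: the `POSES` table, `sweep`, `pose_for`, and `pose_duration_ms` are names invented for this sketch, and the timing counts only the `delay()` calls, ignoring however long the servo itself takes to move.

```python
# (start, stop, step, delay_ms) for the two sweeps in each pose,
# copied from the for loops in the sketch.
POSES = {
    4: ((130, 1, -3, 18), (1, 130, 10, 18)),
    3: ((90, 3, -5, 18), (3, 90, 10, 18)),
    2: ((170, 3, -3, 8), (3, 90, 9, 18)),
    1: ((129, 3, -5, 18), (5, 110, 10, 18)),
    0: ((80, 1, -4, 18), (5, 90, 10, 18)),
}


def sweep(start, stop, step):
    """Angles a C-style inclusive for loop would write to the servo."""
    end = stop + (1 if step > 0 else -1)
    return list(range(start, end, step))


def pose_for(count):
    """Same selection rule as the sketch: cycle through the poses in order."""
    return count % len(POSES)


def pose_duration_ms(pose):
    """Total time spent in delay() calls while playing one pose."""
    return sum(len(sweep(a, b, s)) * d for a, b, s, d in POSES[pose])


first_sweep = sweep(130, 1, -3)
longest = max(POSES, key=pose_duration_ms)
```

Because `loop()` blocks inside each pair of sweeps, a stop command only takes effect once the current pose finishes, so these durations are also a rough bound on how long the jaw keeps moving after the audio ends.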

On the AlexaPi side, I imported PySerial to allow me to create a serial connection to the Arduino and to send one-letter commands to start and stop the animations. I put these into the main.py code at points where the audio player was triggered or Alexa’s “hello” and “yes” responses were played.

The problem was identifying where to put those few lines of code. AlexaPi is still a work in progress, and there isn’t exactly a “put animatronic function here” comment in the code (though there is a platform function called indicate_playback that was recently implemented that may be a candidate for a more elegant fix than my initial hack). You can look at the altered code on my GitHub for the project. But my only changes were:

- Adding import serial to the libraries imported by Python;

- Adding an object to call the serial port of the Arduino card, defined with the other global variables: ruxpinSerial = serial.Serial('/dev/ttyACM0')

- And adding calls to start and stop animation where the code sensed audio playback state, for example:

```python
def alexa_getnextitem(token):
    # https://developer.amazon.com/public/solutions/alexa/alexa-voice-service/rest/audioplayer-getnextitem-request
    time.sleep(0.5)
    if not audioplaying:
        ruxpinSerial.write(b'x')
```
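One way to keep those start and stop calls tidy is to wrap them in a small helper, sketched below. `JawController` and `FakePort` are hypothetical names that do not appear in AlexaPi; the only details taken from the project are the `g` and `x` command bytes the Arduino sketch listens for.

```python
class JawController:
    """Send the sketch's one-letter commands over any object with write()."""

    START = b"g"  # the sketch sets animate = true on 'g'
    STOP = b"x"   # and animate = false on 'x'

    def __init__(self, port):
        self.port = port
        self.running = False

    def start(self):
        # Only send the command when the state actually changes.
        if not self.running:
            self.port.write(self.START)
            self.running = True

    def stop(self):
        if self.running:
            self.port.write(self.STOP)
            self.running = False


class FakePort:
    """Stand-in for a serial port so the logic can be tried without hardware."""

    def __init__(self):
        self.sent = []

    def write(self, data):
        self.sent.append(data)
        return len(data)


port = FakePort()
jaw = JawController(port)
jaw.start()
jaw.start()  # ignored: already animating
jaw.stop()
```

With the bear attached, the fake port would be swapped for a PySerial object such as `serial.Serial('/dev/ttyACM0', 9600)`. Tracking the running state means repeated playback events do not flood the serial line with duplicate commands.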

It took a few attempts to find the right places for the start and stop code to make sure that Teddy Ruxpin didn’t go rabid on me.

Well, halfway there. Or a quarter. pic.twitter.com/uCU48zmTBr

— Sean Gallagher 🐭💣 (@thepacketrat) November 17, 2016

But after an hour or two of testing and debugging, I finally achieved some semi-acceptable results.

WE HAVE ACHIEVED BEARLEXA pic.twitter.com/RU12HZSMWW

— Sean Gallagher 🐭💣 (@thepacketrat) November 18, 2016

Some problems remain. For one thing, I’ve found that the serial device driver for the Arduino is not particularly stable on the Raspberry Pi. On a few occasions, it crashed and then reconnected, giving the Arduino another serial device name (/dev/ttyACM0 became /dev/ttyACM1). I could pass the serial device ID in as an argument at start time, but that’s not an option when you want AlexaPi to start automatically.

Future improvements would include automatically sensing available serial devices and picking the Arduino from a list (by finding the ACM string in its device name). Another, possibly better option is bringing out the soldering iron and connecting the Raspberry Pi’s General Purpose Input/Output (GPIO) pins to the Arduino with an extra bit of hardware from Adafruit or SparkFun.
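The first of those improvements could be sketched roughly as follows. The function names and the `/dev/tty*` search pattern are assumptions for a Linux system like Raspbian, and the sketch naively takes the first match, which would be wrong if more than one ACM device were plugged in.

```python
import glob


def pick_arduino_port(device_names):
    """Return the first device whose name contains 'ACM', or None."""
    matches = sorted(name for name in device_names if "ACM" in name)
    return matches[0] if matches else None


def find_arduino_port(pattern="/dev/tty*"):
    """Scan the device directory; the pattern is an assumption for Linux."""
    return pick_arduino_port(glob.glob(pattern))


# Example with a made-up device list, as seen after a driver reconnect.
chosen = pick_arduino_port(["/dev/ttyAMA0", "/dev/ttyS0", "/dev/ttyACM1"])
```

Calling `find_arduino_port()` at startup instead of hard-coding `/dev/ttyACM0` would let the client survive the device being renamed. PySerial's `serial.tools.list_ports.comports()` is another way to get the candidate list.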

But in the meantime, I’m looking at building some Alexa skills exclusively for Tedlexa—like one that reads me everything Peter Bright said in Slack today or another that tells me how many comments there are on my latest story. For that extra creepiness, perhaps I’ll even write one that responds to the request, “Tell me a bedtime story” by reciting Emily Dickinson or Sylvia Plath poems.

And then, of course, there's always my next project…

Now that I've reanimated Teddy Ruxpin with Alexa, it's time for my next challenge. pic.twitter.com/hchLxcjaEf

— Sean Gallagher 🐭💣 (@thepacketrat) November 25, 2016
